Latent Dirichlet Allocation Modeling of Performance Scales

A summary of our approach to using LDA to uncover the themes of performance scales.

R text analysis topic models LDAApproach

Once we gathered a sufficient number of scales from a variety of sources, we analyzed these scales using topic modeling to identify underlying themes from across the corpus. Latent Dirichlet Allocation (LDA) is a probabilistic topic modeling process which considers each document as a distribution of topics and each topic as a distribution of words. Using Dirichlet probability distribution, the model draws out the implicit themes across a set of documents. We choose LDA because it is a commonly used topic modeling process. We implemented LDA using the STM package in R. The goal was to see if the latent topics identified by the model were related to performance or the indicators of performance as outlined in Koopmans et al.

Choosing our Parameters

LDA works best with larger data sets, which presented a challenge for us. We took steps to optimize the size of our corpus for analysis. Typically, a standard set of stop words are removed from the corpus. Stop words are extremely common words which help to form the structure of sentences but do not inform the content. We did not remove these standard stop words for two reasons. First, to maximize our corpus. Second, because including pronouns such as “I” and “You” provides valuable conceptual information about the scale items, such as the level of analysis. Instead, we constructed a custom stop words list by examining the most frequent words in our data set.

Along with the most frequently occurring words, we also removed words that referred to specific jobs(e.g., nurse) and locations (e.g. Britain, Newfoundland).

##Number of Topics

In LDA topic modeling, the user inputs the number of topics for the model. Choosing this number of topics can be difficult, but the STM package provides guidance and tools for making this decision. The main measure of model strength and the desired number of topics is semantic coherence. Semantic coherence “is maximized when the most probable words in a given topic frequently co-occur together” ( Roberts et al). In addition to semantic coherence, STM also recommends using held-out likelihood, residuals, and exclusivity as measures of model fit. These graphs show the values of those variables on 10 LDA models, with the number of topics ranging from six to 24.

An ideal model would have a high held-out likelihood, a low level of residuals, and a combination of high semantic coherence and exclusivity. STM recommends looking at semantic coherence by exclusivity because semantic coherence can be “artificially” achieved with very common words among topics. This analysis suggests 12 as the optimal number of topics.

##Fitting the model

STM recommends running a model selection process for LDA models. Utilizing 12 topics, we produced multiple LDA topic models to find the most ideal model based again on semantic coherence and exclusivity of topics.

The model with the highest exclusivity and semantic coherence appears closest to the top right corner of the graph indicating model 1 is the best to continue with visualization.

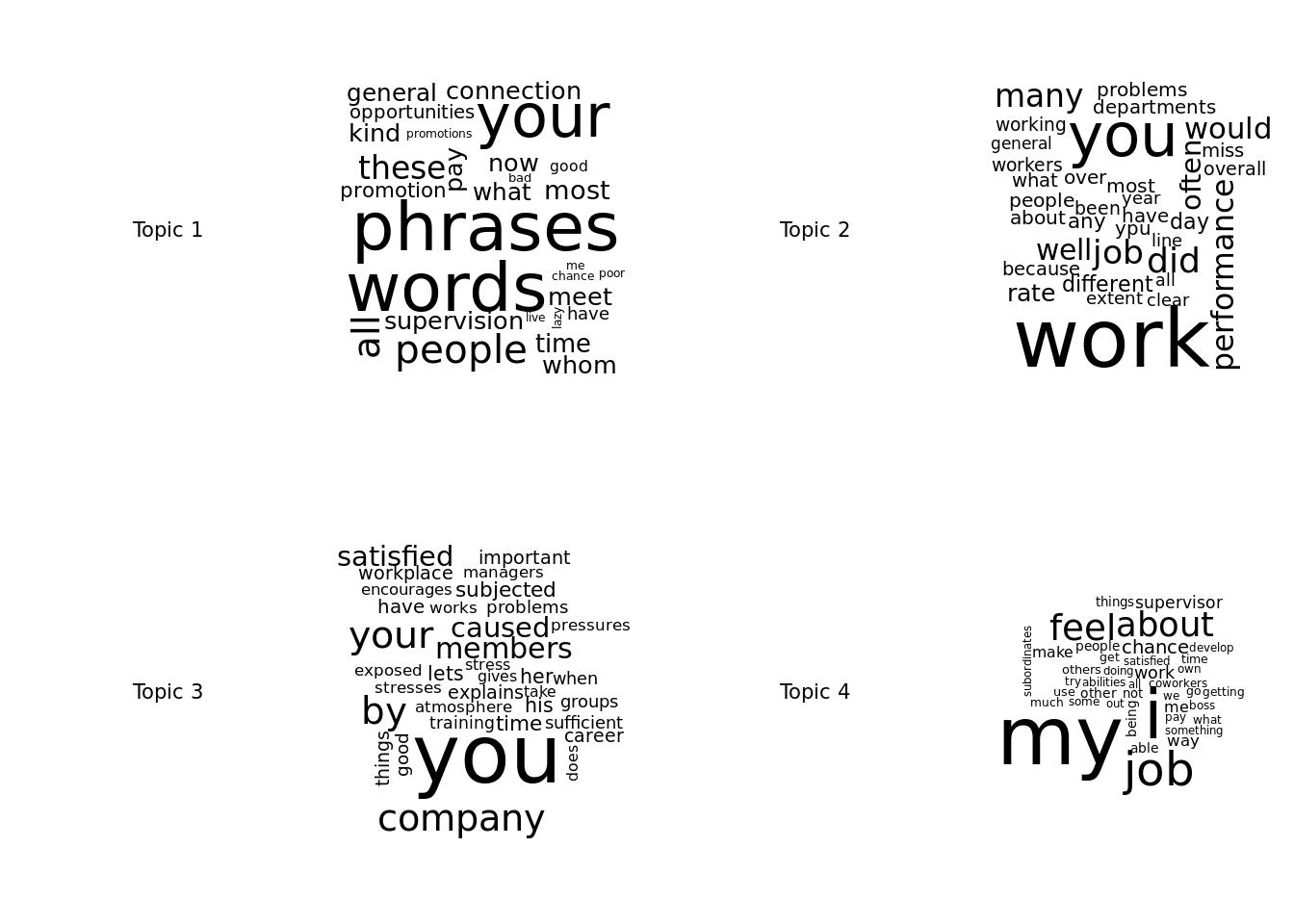

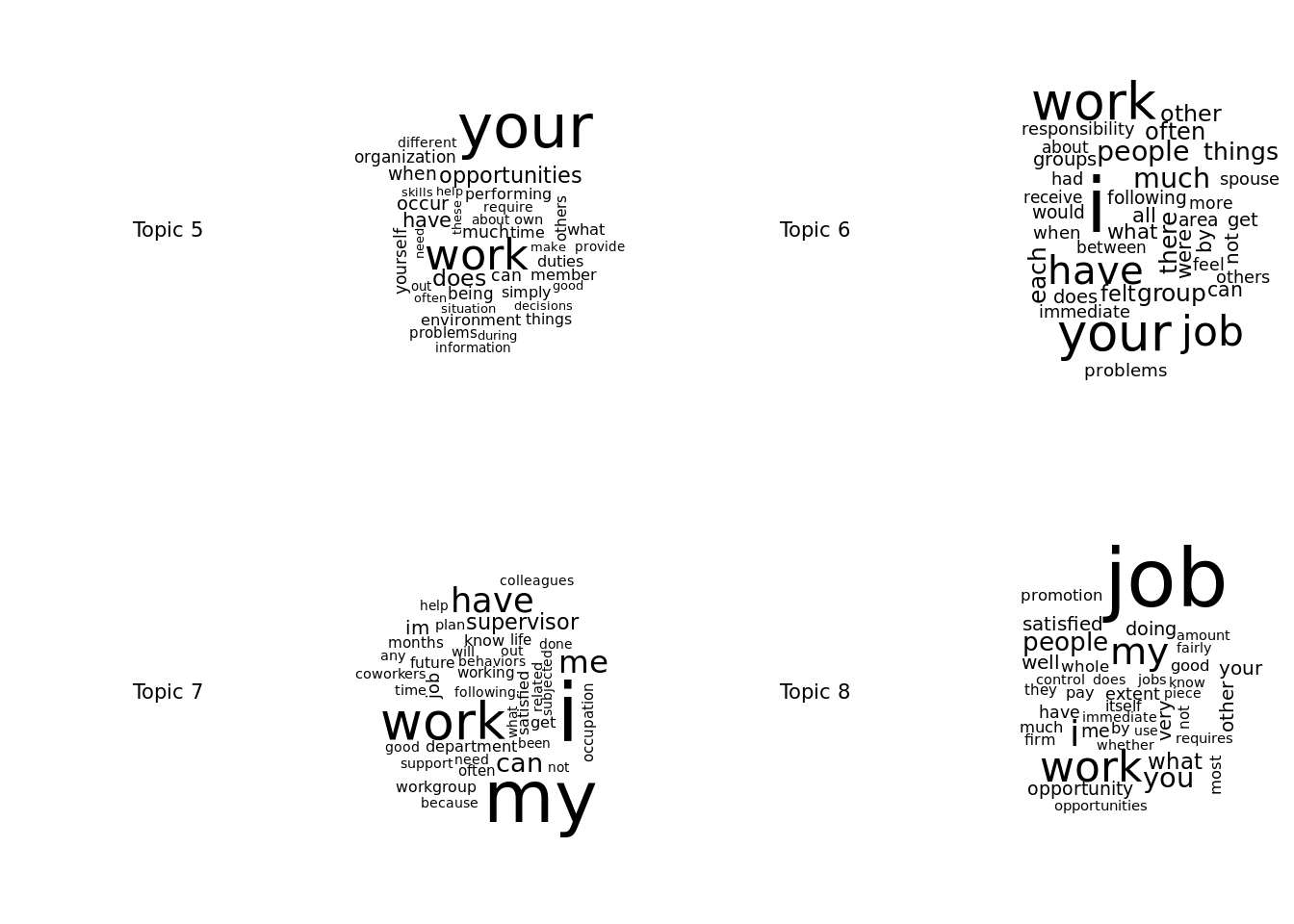

##Visualizations of the Topic Model

The outputs from the topic model reveal some of the latent themes in the job performance scales. We see themes related to ideal job characteristics, feelings about supervisors, satisfaction, and working in groups.

One way to visualize and understand topics in through word clouds. The graphs below represent word cloud clusters for each of the topics. The size of the word relates to how frequently it is represented in the topic.

##Conclusion

Although we do not necessarily see all aspects of our conceptual job performance framework in every topic, some do emerge from our analysis. With the further compilation of scales and the growth of the data set, this approach will likely yield valuable insights into the development of a framework of job performance specifically for the Army.