Appendix

HuggingFace.io

BERT Implementation Notes

All of the methods described above were implemented in Python, using the Transformers library for pre-processing, classification, and named entity recognition. HuggingFace is a natural language processing company that builds open-source tooling around Transformer models, which handle sequence-to-sequence tasks and capture long-range dependencies well. HuggingFace/Transformers is a Python library that exposes a common API for many well-known Transformer architectures, such as BERT, RoBERTa, GPT-2, and DistilBERT, which have reported state-of-the-art results on a variety of NLP tasks, including text classification, information extraction, question answering, and text generation. Each architecture is available with several sets of pre-trained weights.
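As a rough illustration of how the library's API can feed a downstream classifier, the sketch below encodes texts with a pre-trained checkpoint and fits a logistic regression on the resulting vectors. It is not the exact pipeline used for the results in this appendix. The checkpoint name `distilbert-base-uncased`, the mean-pooling step, and the helper names `mean_pool`, `embed_texts`, and `fit_classifier` are assumptions made for illustration, and scikit-learn is assumed for the classifier.

```python
import numpy as np


def mean_pool(hidden_states, attention_mask):
    """Average token vectors per sequence, ignoring padded positions.

    Mean pooling is an assumption here; other choices (e.g. the first
    token's vector) are equally plausible.
    """
    hidden_states = np.asarray(hidden_states, dtype=float)      # (batch, tokens, dim)
    mask = np.asarray(attention_mask, dtype=float)[..., None]   # (batch, tokens, 1)
    summed = (hidden_states * mask).sum(axis=1)                 # (batch, dim)
    counts = np.clip(mask.sum(axis=1), 1.0, None)               # avoid division by zero
    return summed / counts


def embed_texts(texts, checkpoint="distilbert-base-uncased"):
    """Encode texts with a pre-trained checkpoint (assumed name).

    Downloads the weights on first use, so it needs network access.
    """
    # Imported lazily so mean_pool stays usable without these packages.
    import torch
    from transformers import AutoModel, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    model = AutoModel.from_pretrained(checkpoint)
    model.eval()

    batch = tokenizer(list(texts), padding=True, truncation=True, return_tensors="pt")
    with torch.no_grad():
        output = model(**batch)
    return mean_pool(output.last_hidden_state.numpy(), batch["attention_mask"].numpy())


def fit_classifier(features, labels):
    """Fit a logistic regression on fixed sentence vectors (scikit-learn assumed)."""
    from sklearn.linear_model import LogisticRegression

    classifier = LogisticRegression(max_iter=1000)
    classifier.fit(features, labels)
    return classifier
```

With a labeled training set, usage would look like `fit_classifier(embed_texts(train_texts), train_labels)`. Swapping in a different architecture should only require changing the `checkpoint` argument, since the `Auto*` classes select the matching tokenizer and model.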

Additional Model Results: 2nd Place & Runner Up

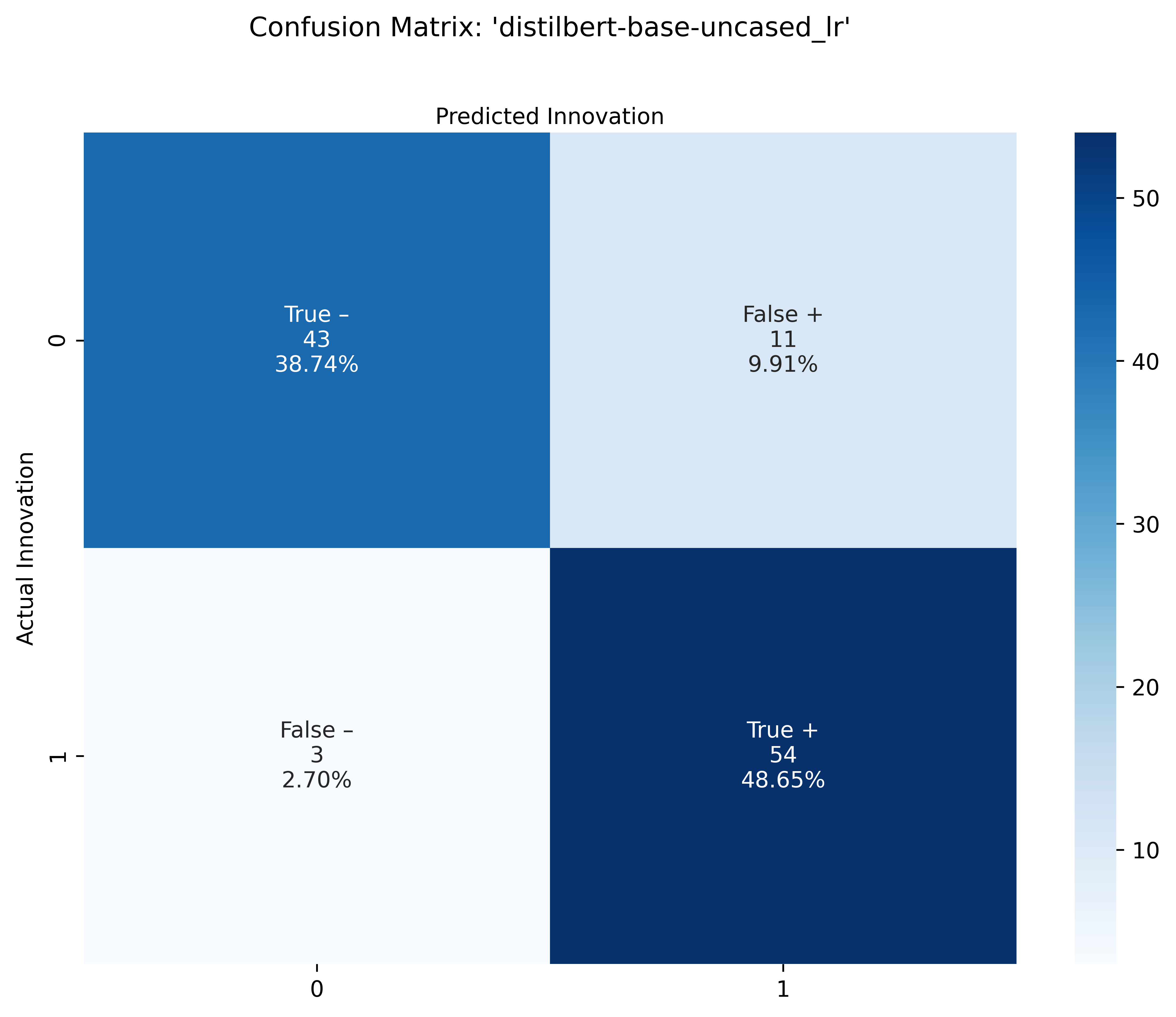

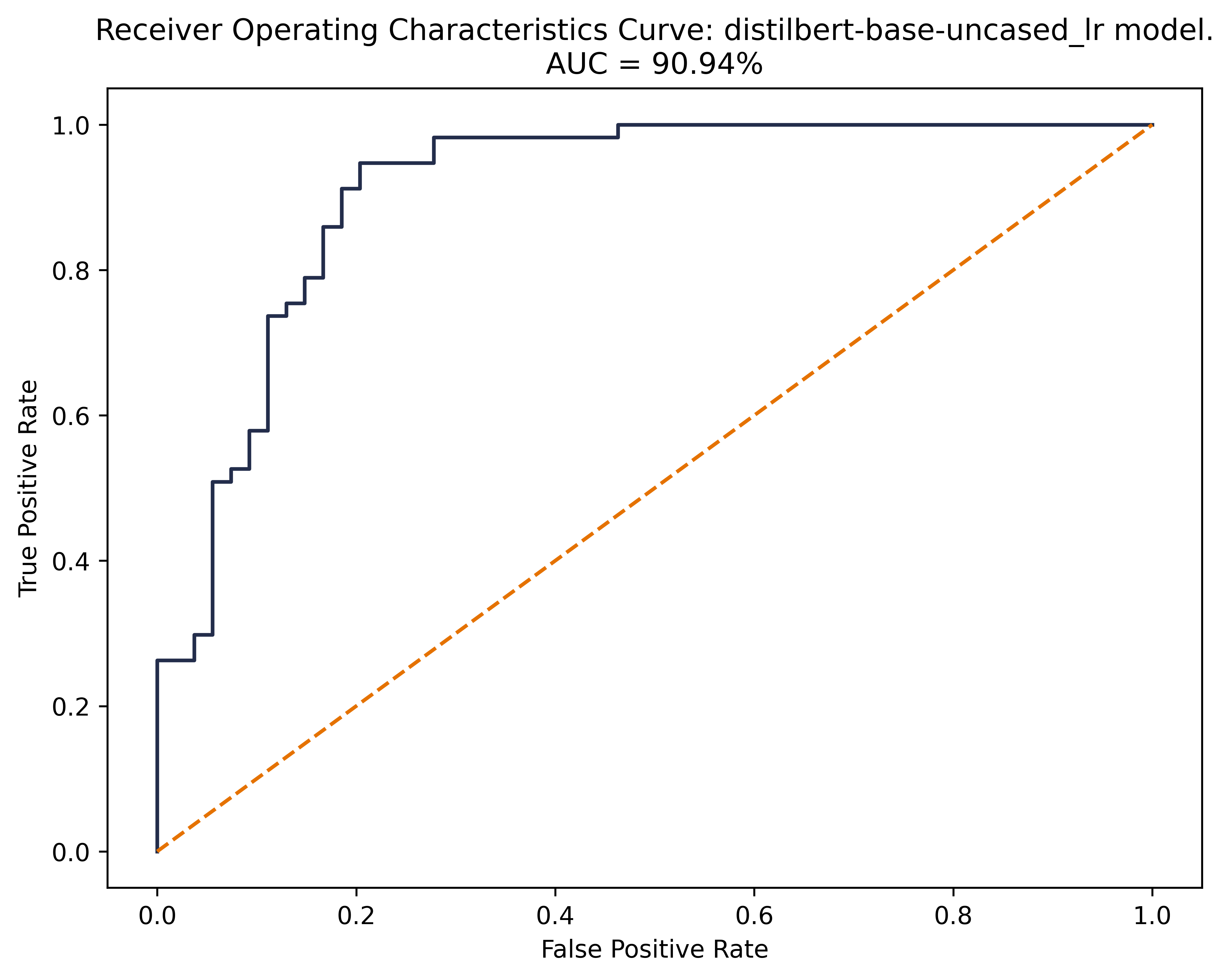

DistilBERT Logistic Regression

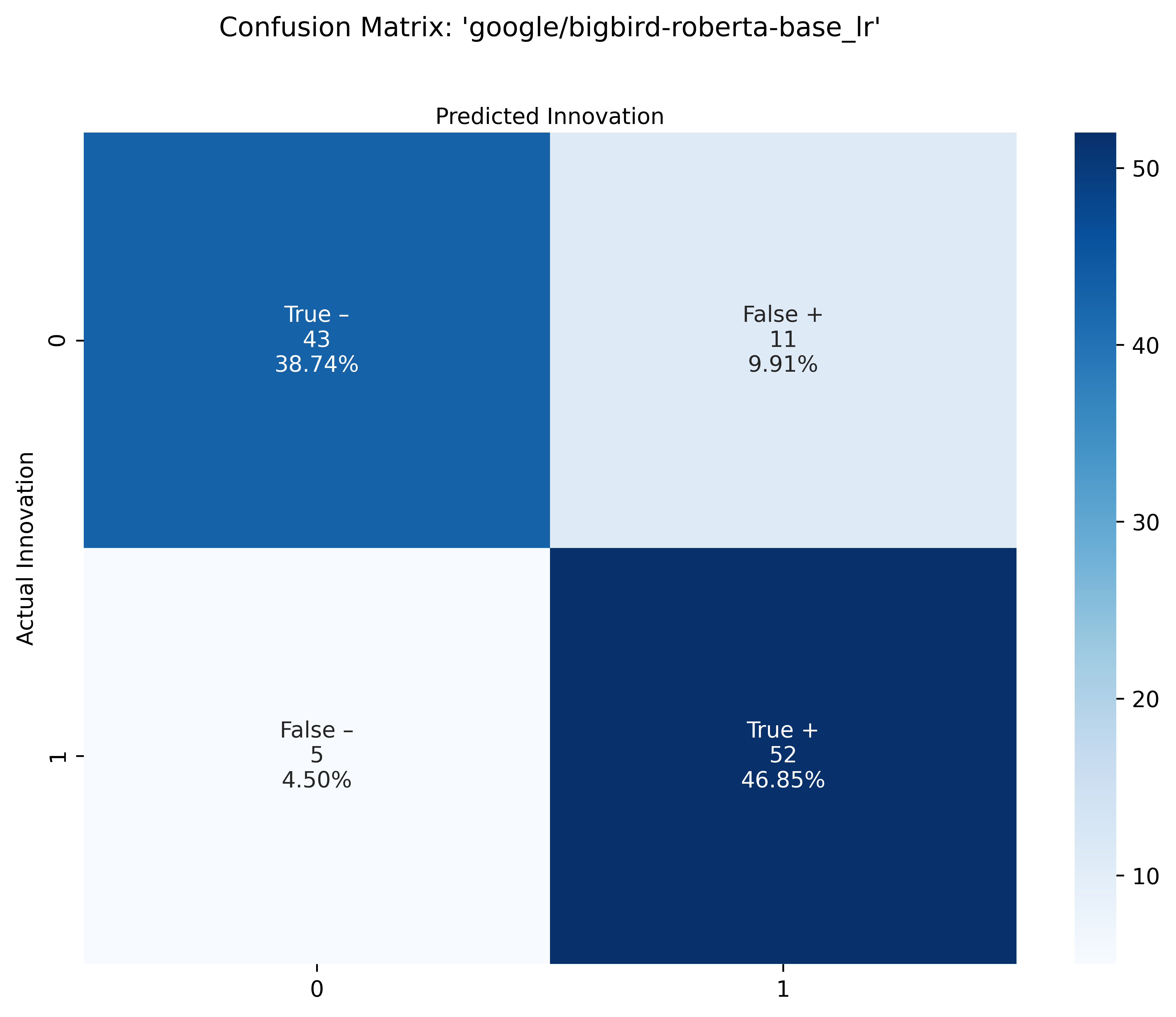

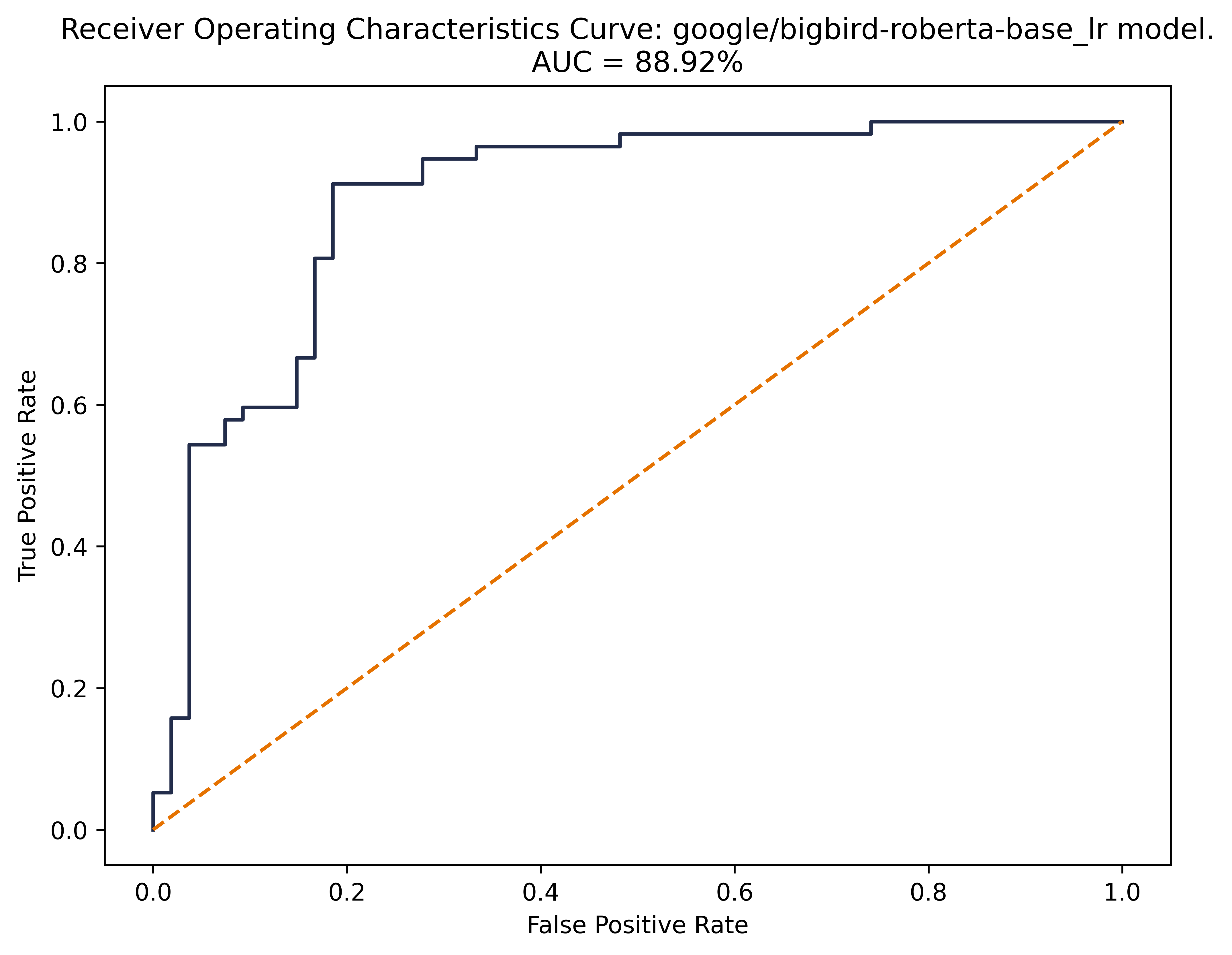

BigBird Logistic Regression